Understanding the Real Impact of Thermal Management on GPU Reliability

The relentless growth of artificial intelligence is pushing the boundaries of computing power, and at the heart of these advances lies a quiet but critical struggle: keeping the machines cool. As GPUs shoulder ever-increasing workloads to train sophisticated models, the heat they generate presents a significant challenge, threatening reliability and efficiency. Addressing this issue is no longer a technical afterthought – it is a business imperative.

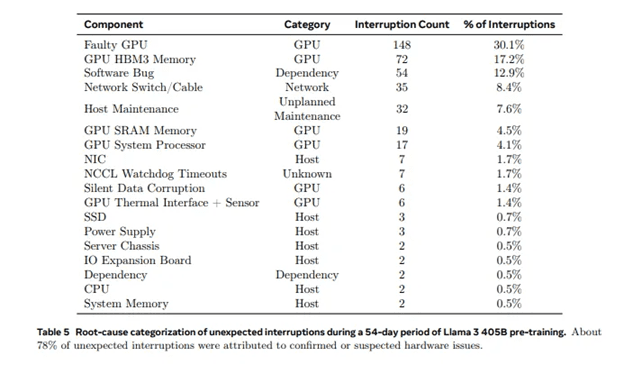

Take the training of Meta’s Llama 3 405B, for instance. Over the course of a 54-day run, Meta’s AI infrastructure, some of the most carefully engineered in the world, experienced nearly eight unexpected failures a day. More than half of them (58.7%) were attributed to GPU issues, or roughly four to five GPU failures per day. This is a reminder that even the most advanced systems face hardware limitations. While the challenges of AI training are well documented, these failures underscore a broader truth: managing high-powered GPUs in dense server setups is an uphill battle.

(Image credit: Meta)

As the failure breakdown shows, AI training puts distinctly intense pressure on GPUs compared with other components, especially CPUs and system memory. As a result, GPUs fail far more often.

But why does that matter?

Simply put, at that rate a single 54-day run accumulates more than 200 GPU failures. Replacing that many GPUs requires substantial capital investment, and every failure further erodes the return on the training infrastructure.
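The "more than 200" figure follows from the run's rounded numbers. The sketch below shows the arithmetic. It assumes exactly eight unexpected failures per day; the source says "nearly eight", so this is an upper-end estimate. It also treats every GPU-attributed failure as one failed GPU, which is a simplifying assumption and not Meta's accounting.

```python
# Back-of-the-envelope estimate of GPU failures during the 54-day Llama 3 405B run.
# Assumptions: exactly 8 unexpected failures/day (the source says "nearly eight"),
# and each GPU-attributed failure counts as one failed GPU.

TRAINING_DAYS = 54
FAILURES_PER_DAY = 8.0
GPU_SHARE = 0.587  # share of unexpected failures attributed to GPUs


def estimate_failures(days: int, per_day: float, gpu_share: float) -> tuple[float, float]:
    """Return (total unexpected failures, GPU-attributed failures) for the run."""
    total = days * per_day
    return total, total * gpu_share


total, gpu_failures = estimate_failures(TRAINING_DAYS, FAILURES_PER_DAY, GPU_SHARE)
print(f"Unexpected failures:     {total:.0f}")
print(f"GPU-attributed failures: {gpu_failures:.0f}")
print(f"GPU failures per day:    {FAILURES_PER_DAY * GPU_SHARE:.1f}")
```

With these inputs it prints 432 unexpected failures, about 254 GPU-attributed failures, and about 4.7 GPU failures per day.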

The High Stakes of GPU Reliability

When a GPU fails in an AI training cluster, the ripple effects are both immediate and expensive. Industry experts estimate that GPU downtime costs can range from $500 to $2,000 per hour per node. Beyond direct replacement costs, the operational toll is considerable:

- Training Delays: Failures often mean restarting from a checkpoint, losing hours or days of progress.

- Lost Productivity: Engineers and researchers sit idle, with hundreds of person-hours lost for each incident.

- Escalating Costs: The total cost of diagnosing and resolving a GPU failure can reach $25,000 when factoring in downtime, replacement, and validation.

- Efficiency Loss: Consider a deployment of 1,000 GPUs, eight per server across 125 servers costing $350,000 each, for a total of $43.75 million. A 1% drop in cluster utilization would leave approximately $437,500 of that hardware idle, which is 1% of the capital investment. Even minor inefficiencies in GPU utilization carry a significant cost.
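The efficiency-loss figure is 1% of the fleet's hardware cost. The sketch below reproduces it from the numbers given; 1,000 GPUs across 125 servers implies 8 GPUs per server. The `downtime_cost` helper and its 10-hour, single-node outage are hypothetical. They are included only to show how the quoted $500 to $2,000 per node-hour range scales.

```python
# Reproduces the utilization-loss estimate from the figures in the text,
# plus a hypothetical downtime-cost band for a single outage.

GPUS = 1_000
SERVERS = 125
COST_PER_SERVER_USD = 350_000
UTILIZATION_DROP_PCT = 1  # percentage points of cluster utilization lost


def utilization_loss(servers: int, cost_per_server: int, drop_pct: float) -> float:
    """Value of hardware left idle by a utilization drop, as a share of capex."""
    fleet_capex = servers * cost_per_server
    return fleet_capex * drop_pct / 100


def downtime_cost(hours: float, nodes: int, rate_per_node_hour: float) -> float:
    """Hypothetical helper: outage hours x affected nodes x hourly rate per node."""
    return hours * nodes * rate_per_node_hour


print(f"GPUs per server: {GPUS // SERVERS}")
print(f"Fleet capex:     ${SERVERS * COST_PER_SERVER_USD:,}")
print(f"1% utilization:  ${utilization_loss(SERVERS, COST_PER_SERVER_USD, UTILIZATION_DROP_PCT):,.0f}")

# Hypothetical 10-hour outage on one node, at the low and high ends of the quoted range.
low = downtime_cost(10, 1, 500)
high = downtime_cost(10, 1, 2_000)
print(f"10 h outage, 1 node: ${low:,.0f} to ${high:,.0f}")
```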

These numbers paint a stark picture: GPU failures are not just technical inconveniences—they’re financial liabilities.

So what was the problem with the GPUs? Why did they fail so often? Was it a design flaw in the GPUs?

Probably not. Here’s a quote from the report that offers a clue.

“One interesting observation is the impact of environmental factors on training performance at scale. For Llama 3 405B, we noted a diurnal 1-2% throughput variation based on time-of-day. This fluctuation is the result of higher mid-day temperatures impacting GPU dynamic voltage and frequency scaling.”

Heat: A Silent Saboteur

Temperature fluctuations, often overlooked, play a pivotal role in GPU reliability. Meta’s experience with performance variations linked to mid-day heat is far from unique. Across data centers, diurnal temperature patterns drive operational challenges:

- Daily temperature swings can degrade server performance: Repeated heating and cooling places thermal stress on server components, potentially causing performance degradation and hardware failures.

- Summer peaks drive up fan power consumption: Higher ambient temperatures force server fans to run faster to keep components within safe operating temperatures, which increases energy consumption.

In dense GPU deployments, the problem compounds. Even with state-of-the-art fans, maintaining optimal temperatures is a formidable challenge.
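Fan power climbs quickly with ambient temperature because of the idealized fan affinity laws. Under those laws, fan power scales roughly with the cube of fan speed, so a modest speed increase costs disproportionately more energy. The sketch below assumes ideal affinity-law behavior, from which real server fans and ducting deviate, and a hypothetical 20% speed increase.

```python
def fan_power_ratio(speed_ratio: float) -> float:
    """Ideal fan affinity law: power scales with the cube of the speed ratio."""
    if speed_ratio <= 0:
        raise ValueError("speed_ratio must be positive")
    return speed_ratio ** 3


# Hypothetical scenario: fans spin 20% faster to offset a hotter summer afternoon.
ratio = fan_power_ratio(1.20)
print(f"Fan power rises by about {ratio - 1:.0%}")  # about 73%
```

Under these idealized assumptions, a 20% speed increase costs roughly 73% more fan power.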

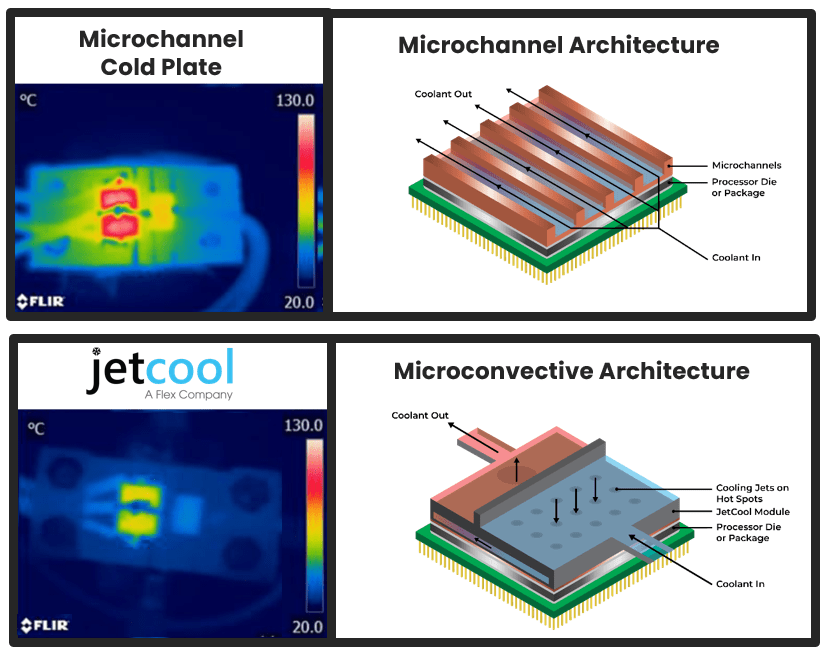

Rethinking Cooling: From Air to Liquid

Traditional air cooling systems, though ubiquitous, struggle to keep pace with modern GPUs. Thermal imaging reveals why: non-uniform heat distributions create hot spots that air simply cannot target effectively. The consequences include:

- Localized Overheating: Hot spots can exceed safe operating temperatures, triggering performance throttling.

- Thermal Stress: Uneven cooling causes mechanical wear, accelerating failure rates.

- Limited Capacity: Air cooling tops out at roughly 400–500 W TDP, well below the demands of today’s GPUs.

Extending GPU Lifespan

Temperature and reliability are closely intertwined. A widely cited rule of thumb holds that every 10°C reduction in operating temperature doubles a semiconductor’s lifespan. This is where JetCool’s microconvective liquid cooling technology comes into play. By addressing heat at its source, JetCool lowers GPU temperatures by 30°C compared with air cooling. Under that rule, the reduction translates to an 8x (2 × 2 × 2) improvement in theoretical lifespan. The approach offers:

- Precision Targeting: Microjet impingement cools GPU hot spots with pinpoint accuracy.

- Thermal Uniformity: By minimizing heat gradients, JetCool delivers consistent performance across the GPU.

- Scalable Efficiency: Capable of handling power densities exceeding 1,500W per socket, JetCool is built for the future.

For organizations heavily invested in AI infrastructure, these gains are not just technical—they’re strategic.
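The 8x lifespan figure comes from applying the 10°C doubling rule three times (2³ = 8). This rule of thumb is commonly traced to the Arrhenius model of temperature-accelerated failure. The sketch below computes both. The Arrhenius inputs are hypothetical: an activation energy of 0.7 eV and junction temperatures of 85°C (air) and 55°C (liquid). Real activation energies vary by failure mechanism, so treat the Arrhenius result as illustrative only.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def rule_of_thumb_factor(delta_c: float) -> float:
    """Lifespan multiplier if every 10 °C reduction doubles lifespan."""
    return 2 ** (delta_c / 10)


def arrhenius_factor(hot_c: float, cool_c: float, activation_ev: float) -> float:
    """Arrhenius acceleration factor between a hotter and a cooler temperature (°C)."""
    t_hot = hot_c + 273.15
    t_cool = cool_c + 273.15
    return math.exp(activation_ev / BOLTZMANN_EV_PER_K * (1 / t_cool - 1 / t_hot))


print(f"Rule of thumb, 30 °C cooler: {rule_of_thumb_factor(30):.1f}x")
# Hypothetical inputs: 0.7 eV activation energy, 85 °C vs 55 °C junction temperature.
print(f"Arrhenius, 85 °C -> 55 °C:   {arrhenius_factor(85, 55, 0.7):.1f}x")
```

With these particular assumed inputs, both approaches land near 8x. A different activation energy or temperature pair would shift the Arrhenius result noticeably.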

The Numbers Behind the Innovation – JetCool Case Study

Comprehensive testing of NVIDIA H100 GPUs underscores the impact of JetCool’s microconvective liquid cooling technology. Thermal resistance is the temperature rise per watt of heat dissipated and a critical metric for heat-transfer efficiency. On this metric, air cooling falls significantly behind at 0.122 °C/W. JetCool measures 0.021 °C/W on the NVIDIA H100, nearly six times lower, and 0.013 °C/W on the NVIDIA GB200. This substantial improvement not only ensures better cooling performance but also enhances GPU reliability under sustained workloads.
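Thermal resistance converts directly into temperature rise: ΔT = P × R, where P is the heat dissipated in watts and R is the thermal resistance in °C/W. The sketch below compares the three quoted values at a hypothetical 700 W load. Both the load and the function name are assumptions, and the test conditions behind the published figures are not stated here.

```python
# Quoted thermal resistances (°C/W) from the case study above.
THERMAL_RESISTANCE_C_PER_W = {
    "Air cooling (H100)": 0.122,
    "JetCool (H100)": 0.021,
    "JetCool (GB200)": 0.013,
}


def temperature_rise(power_w: float, resistance_c_per_w: float) -> float:
    """Rise above the reference (e.g., inlet air or coolant): delta_T = P * R."""
    return power_w * resistance_c_per_w


LOAD_W = 700  # hypothetical heat load, used only for comparison
for name, r in THERMAL_RESISTANCE_C_PER_W.items():
    print(f"{name:<20} {temperature_rise(LOAD_W, r):5.1f} °C rise")
```

At the same assumed load, the lower resistance leaves far more headroom between the reference temperature and the GPU’s limits.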

The advantages extend far beyond thermal resistance. In large-scale deployments, such as a fleet of 2,000 GPUs valued at $33 million, traditional direct liquid cooling (DLC) systems can drive annual power costs of $2 million. JetCool’s technology offers a smarter alternative, cutting cooling energy use by up to 30% compared with conventional liquid cooling systems. By reducing facility cooling demands, JetCool lowers operational expenditures and lets AI capacity grow without a proportional increase in cooling energy. It also shrinks the environmental footprint of AI workloads.
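The fleet figures can also be expressed per GPU and as annual savings. The sketch below assumes, although the text does not say so, that the full $2 million annual power cost is attributable to cooling. Under that assumption, the "up to 30%" reduction gives an upper bound on savings.

```python
FLEET_GPUS = 2_000
FLEET_VALUE_USD = 33_000_000
ANNUAL_DLC_POWER_COST_USD = 2_000_000
MAX_COOLING_ENERGY_REDUCTION = 0.30  # "up to 30%"


def annual_savings_upper_bound(power_cost: float, reduction: float) -> float:
    """Upper-bound savings, assuming the whole power cost is cooling energy."""
    return power_cost * reduction


print(f"Value per GPU:         ${FLEET_VALUE_USD / FLEET_GPUS:,.0f}")
savings = annual_savings_upper_bound(ANNUAL_DLC_POWER_COST_USD, MAX_COOLING_ENERGY_REDUCTION)
print(f"Savings (upper bound): ${savings:,.0f} per year")
```

If only part of the $2 million goes to cooling, the savings scale down proportionally.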

Even under challenging conditions, JetCool keeps GPUs safely below throttling limits. One example is operating with warm 60°C PG25 coolant, a mix of 25% propylene glycol and water. This helps maintain reliable performance in the most demanding environments, enabling data centers to stay efficient while meeting the rising computational needs of AI-driven workloads.
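Whether a GPU stays below its throttling point with warm coolant can be estimated from the thermal-resistance relation T ≈ T_coolant + P × R. Rearranged, the relation gives the highest sustainable heat load for a given temperature limit. In the sketch below, the 85 °C limit and the function name are hypothetical placeholders, not NVIDIA specifications. Substitute the actual throttle threshold and the reference conditions under which R was measured.

```python
def max_sustainable_power(limit_c: float, coolant_c: float, resistance_c_per_w: float) -> float:
    """Highest heat load (W) keeping T = T_coolant + P * R at or below limit_c."""
    if limit_c <= coolant_c:
        return 0.0  # no headroom: coolant is already at or above the limit
    return (limit_c - coolant_c) / resistance_c_per_w


COOLANT_C = 60.0             # warm PG25 supply temperature from the text
HYPOTHETICAL_LIMIT_C = 85.0  # placeholder throttle threshold, not a vendor figure

for name, r in {"JetCool (H100)": 0.021, "JetCool (GB200)": 0.013}.items():
    p_max = max_sustainable_power(HYPOTHETICAL_LIMIT_C, COOLANT_C, r)
    print(f"{name:<16} {p_max:6.0f} W")
```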

A Smarter Way Forward

The growing demands of AI require a reimagining of how we cool the hardware driving innovation. JetCool’s microconvective liquid cooling represents a critical evolution, addressing the heat challenges of today’s GPUs with unmatched precision and efficiency.

As data centers push toward higher densities and greater power efficiency, solutions like JetCool offer a path forward: one where performance, reliability, and sustainability are no longer competing priorities but aligned outcomes. Learn more about JetCool’s cold plate solutions and Flex’s power module solutions.