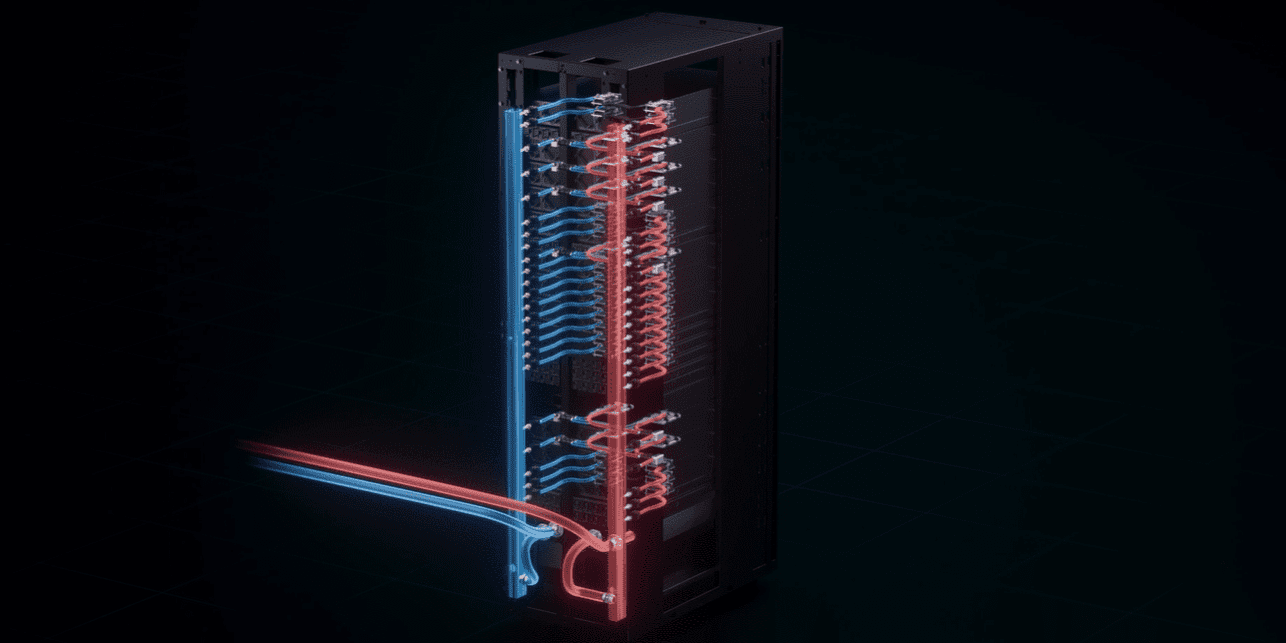

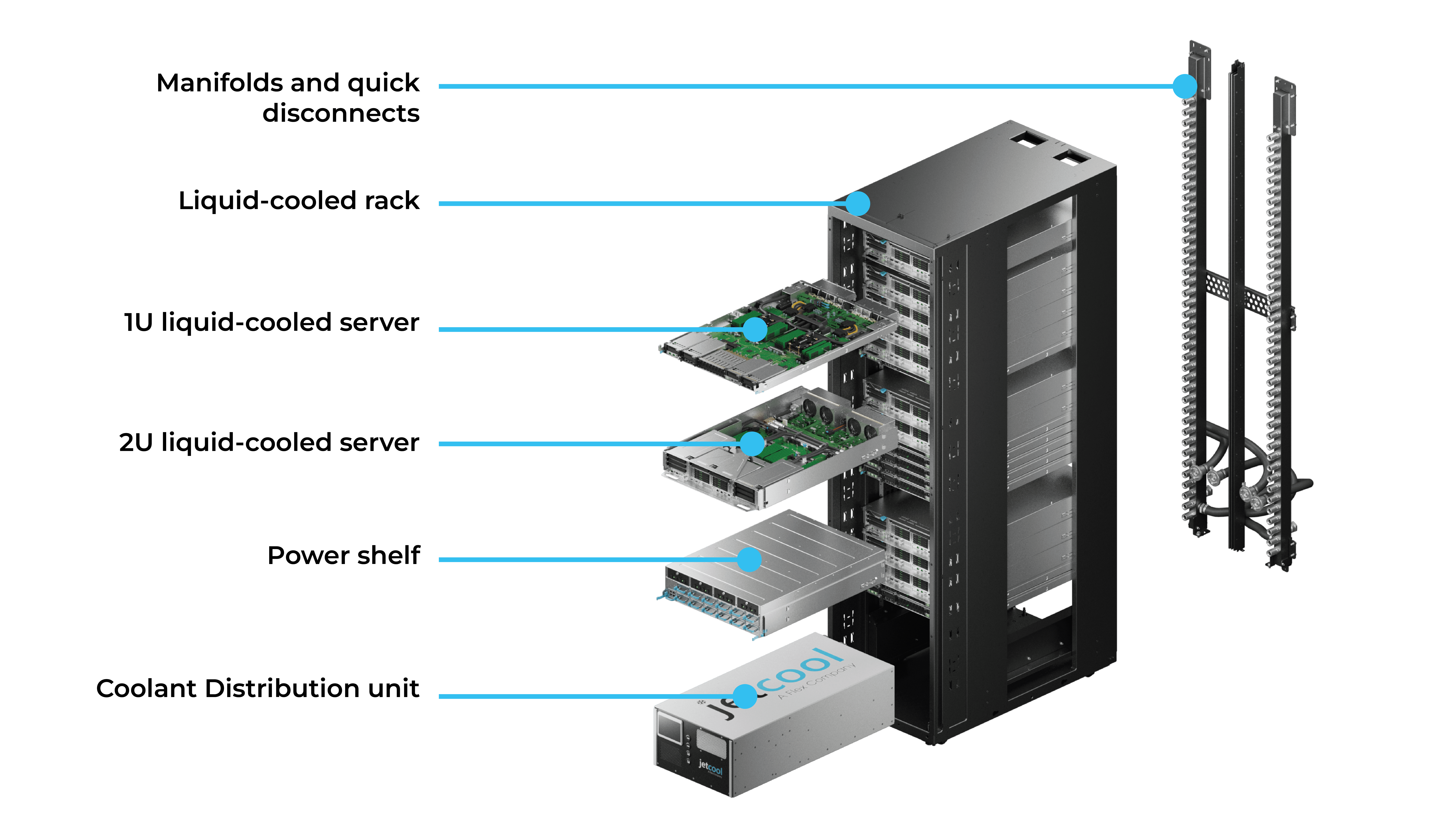

As rack densities reach 50 kW and beyond, direct liquid cooling has become one of the leading solutions for managing AI-driven heat loads in modern data centers, according to the Uptime Institute. When we think of liquid cooling, we tend to think of three components: plates, pumps, and pipes. Traditionally, design conversations treat the heat-transfer components (cold plates and coolant distribution units, or CDUs) as the core of the architecture. However, often-overlooked ancillary components such as in-rack manifolds and quick disconnects (QDs) play a critical role in scalability, reliability, and efficiency. In this blog, we'll explore why selecting the right supplier of manifolds and QDs can make or break your liquid cooling strategy.

What is a Liquid Manifold in Data Center Cooling?

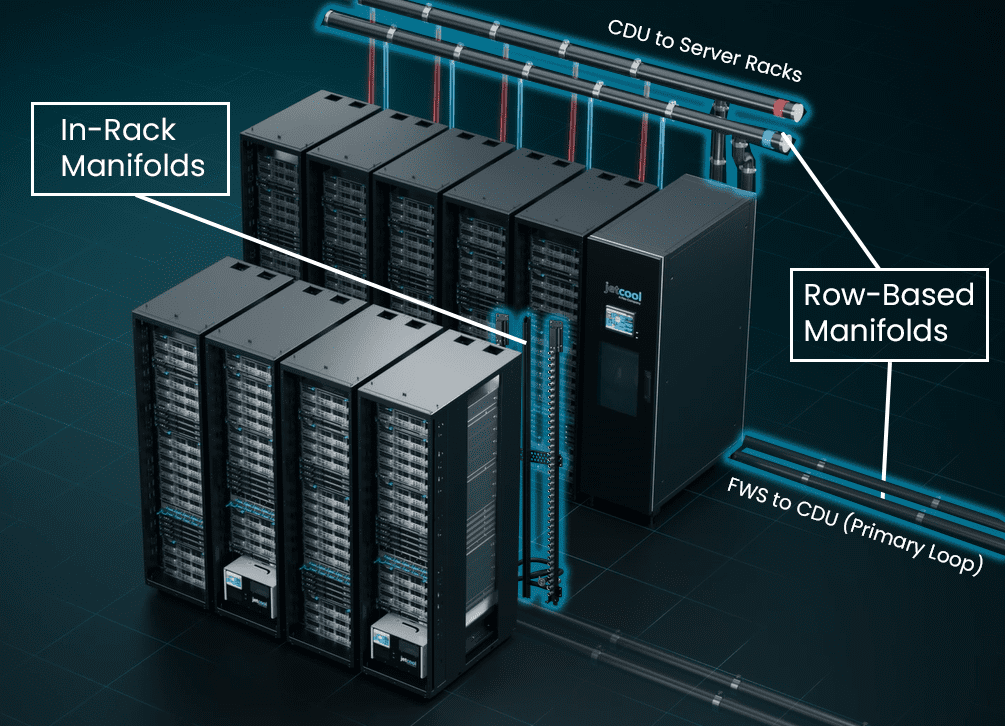

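A liquid manifold is a distribution header: it splits a single coolant supply into multiple branch connections and gathers the return flow back into a single line, so that one source can serve many servers or racks. Manifolds generally come in two configurations: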

- In-Rack Manifolds: Mounted to the server rack to distribute coolant directly to servers. Vertical manifolds are typically mounted to the back of the rack, while horizontal manifolds can be mounted to the front.

- Row-Based Manifolds: Piping assemblies positioned above or below the floor to distribute coolant across multiple racks.

Both types connect to coolant distribution units (CDUs), which transfer heat between the IT-side coolant loop and the facility water system (FWS). Row-based manifolds are a key part of the secondary fluid network (SFN), also known as the technology cooling system (TCS), which carries coolant between the CDUs and the cold plates inside servers.

What are Universal Quick Disconnects (UQDs)?

Quick disconnect couplings enable fast, tool-free connections and disconnections, making maintenance and hot-swapping servers more efficient. High-quality quick disconnects also minimize leakage and pressure drop, preserving system integrity and thermal performance in high-density environments.

Universal quick disconnects come in different finishes, sealing mechanisms, and connection styles (such as bidirectional or blind mate), as well as various diameters to support different flow requirements. For high-density platforms, larger QDs and ports are typically selected because a larger bore carries the same coolant flow at a lower velocity, which reduces pressure drop and the pump pressure needed to sustain that flow. This improves efficiency, especially when paired with larger hoses in systems designed for high-power processors.
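To see why bore size matters so much, a common way to model the loss through a fitting is ΔP = K·ρ·v²/2, where v = Q/A is the mean velocity through the bore. At a fixed flow rate, velocity scales with 1/d², so pressure drop scales with 1/d⁴. The sketch below assumes water at roughly 25 °C and a single loss coefficient K for both sizes; the K value, flow rate, and bore diameters are hypothetical placeholders, not figures for any real UQD.

```python
import math

WATER_DENSITY = 997.0  # kg/m^3, water at about 25 C (assumption)
HYPOTHETICAL_K = 2.0   # dimensionless loss coefficient, illustrative only


def lpm_to_m3s(lpm: float) -> float:
    """Convert litres per minute to cubic metres per second."""
    return lpm / 1000.0 / 60.0


def fitting_pressure_drop(flow_lpm: float, bore_mm: float, k: float,
                          density: float = WATER_DENSITY) -> float:
    """Pressure drop in pascals, modelled as dP = K * rho * v^2 / 2."""
    area = math.pi * (bore_mm / 1000.0) ** 2 / 4.0  # bore cross-section, m^2
    velocity = lpm_to_m3s(flow_lpm) / area          # mean velocity, m/s
    return k * density * velocity ** 2 / 2.0


# Same hypothetical flow through a small and a large bore.
FLOW_LPM = 10.0
small = fitting_pressure_drop(FLOW_LPM, bore_mm=6.0, k=HYPOTHETICAL_K)
large = fitting_pressure_drop(FLOW_LPM, bore_mm=12.0, k=HYPOTHETICAL_K)
print(f"6 mm bore:  {small / 1000:.1f} kPa")
print(f"12 mm bore: {large / 1000:.1f} kPa")
print(f"ratio: {small / large:.1f}x")
```

Under this model, doubling the bore from 6 mm to 12 mm cuts the pressure drop by a factor of 2⁴ = 16. In practice K also varies with coupling design and size, so manufacturer pressure-drop curves should replace this simplified model when selecting real parts.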

Design Consideration #1: Flow Distribution and Pressure Drop

Flow rate and pressure drop are closely linked: for any manifold, QD, or cold plate, pressure drop rises as flow increases, roughly with the square of flow rate in turbulent conditions. Because coolant circulates continuously, manifolds and quick disconnects should add as little pressure drop as possible so they do not burden your CDU's pumping power. Manifolds must also distribute flow evenly, so that every server branch receives the flow its cold plates were designed for. Achieving this requires careful alignment between manifolds, QDs, CDUs, and cold plates so that the fluidics stay balanced and compatible across the entire system for long-term, 24/7 operation.
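The link between flow distribution and pump load can be sketched with a simple model. Assume each server branch off an in-rack manifold behaves as a quadratic resistance, ΔP = k·Q², and that the manifold headers are large enough that every branch sees the same differential pressure. Then each branch flow is Q_i = √(ΔP/k_i), and the ideal hydraulic power the CDU pump must supply is Q_total·ΔP (before pump efficiency). The branch resistances and total flow below are hypothetical.

```python
import math
from typing import List, Tuple


def split_flow(total_lpm: float, branch_k: List[float]) -> Tuple[float, List[float]]:
    """Split total flow across parallel branches that each obey dP = k * Q^2.

    Assumes an ideal manifold (no header losses), so all branches share one
    differential pressure. Units: Q in L/min, k in kPa/(L/min)^2, dP in kPa.
    """
    # sum(Q_i) = sqrt(dP) * sum(1/sqrt(k_i)), solved for dP
    conductance = sum(1.0 / math.sqrt(k) for k in branch_k)
    dp = (total_lpm / conductance) ** 2
    flows = [math.sqrt(dp / k) for k in branch_k]
    return dp, flows


def hydraulic_power_w(total_lpm: float, dp_kpa: float) -> float:
    """Ideal hydraulic power in watts: flow (m^3/s) times pressure (Pa)."""
    return (total_lpm / 60000.0) * (dp_kpa * 1000.0)


# Four hypothetical server branches; the last has a more restrictive QD.
branches = [5.0, 5.0, 5.0, 20.0]
dp, flows = split_flow(total_lpm=6.0, branch_k=branches)
print(f"branch dP: {dp:.2f} kPa")
for i, q in enumerate(flows):
    print(f"server {i}: {q:.2f} L/min")
print(f"hydraulic power: {hydraulic_power_w(6.0, dp):.2f} W")
```

Because Q ∝ 1/√k in this model, the branch with four times the resistance receives half the flow of the others, which is why a single undersized QD can starve one server even when the CDU delivers the right total flow.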

Design Consideration #2: Cold Plate Architecture Requirements

Each processor has its own thermal output and localized heat flux, which makes cold plate design one of the first engineering considerations. That's why it's critical to select manifolds with the correct port sizes and quick disconnects that meet the cold plate's flow requirements. In the AI era, a common design guideline is a flow-rate-to-heat-dissipation ratio of approximately 1.5 liters per minute (LPM) per kilowatt (kW) of heat load. Another typical range is 1 to 5 LPM per cooling module, depending on the application, with high-powered GPUs often requiring 1 to 3 LPM for optimal performance.
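The LPM-per-kW guideline follows from a steady-state energy balance: the heat carried away equals coolant mass flow times specific heat times temperature rise, P = ρ·Q·c_p·ΔT. The sketch below assumes plain water properties (ρ ≈ 997 kg/m³, c_p ≈ 4.18 kJ/(kg·K)); glycol-water mixtures have a lower specific heat and need somewhat more flow for the same rise. The 1.2 kW cold plate is a hypothetical example.

```python
WATER_DENSITY = 997.0         # kg/m^3, water at about 25 C (assumption)
WATER_SPECIFIC_HEAT = 4180.0  # J/(kg*K), water (assumption)
LPM_PER_M3S = 60000.0         # 1 m^3/s = 60,000 L/min


def required_flow_lpm(heat_w: float, delta_t_k: float,
                      density: float = WATER_DENSITY,
                      cp: float = WATER_SPECIFIC_HEAT) -> float:
    """Flow (L/min) that absorbs heat_w watts with a coolant rise of delta_t_k kelvin."""
    flow_m3s = heat_w / (density * cp * delta_t_k)
    return flow_m3s * LPM_PER_M3S


def temperature_rise_k(heat_w: float, flow_lpm: float,
                       density: float = WATER_DENSITY,
                       cp: float = WATER_SPECIFIC_HEAT) -> float:
    """Coolant temperature rise (K) when flow_lpm carries away heat_w watts."""
    flow_m3s = flow_lpm / LPM_PER_M3S
    return heat_w / (density * cp * flow_m3s)


# 1.5 LPM per kW, as in the guideline above.
print(f"rise at 1.5 LPM/kW: {temperature_rise_k(1000.0, 1.5):.1f} K")
# Hypothetical 1.2 kW GPU cold plate designed for a 10 K rise.
print(f"flow for 1.2 kW at 10 K: {required_flow_lpm(1200.0, 10.0):.2f} L/min")
```

With these water properties, 1.5 LPM/kW corresponds to a coolant rise of about 9.6 K, and the hypothetical 1.2 kW cold plate needs about 1.73 LPM at a 10 K rise, inside the 1 to 3 LPM range quoted for GPUs.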

Another key factor is coolant inlet temperature, which can vary significantly depending on your cooling vendor and deployment. The Open Compute Project (OCP) in-rack manifold guidelines recommend supporting a wide temperature range (17°C–75°C), because coolant temperature depends on multiple variables: processor type, IT stack, cooling architecture, and overall power density.
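One practical use of such a range is a quick sanity check: the return temperature is the supply temperature plus the coolant rise, and both should stay inside the window the manifold and QDs are rated for. A minimal sketch, assuming the 17°C–75°C window from the OCP guidance as the rated range; the supply temperatures and the 10 K rise are hypothetical operating points, and the names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RatedRange:
    """Inclusive temperature window (deg C) a component is rated for."""
    min_c: float
    max_c: float

    def contains(self, temp_c: float) -> bool:
        return self.min_c <= temp_c <= self.max_c


# Window taken from the OCP in-rack manifold guidance quoted in the text.
MANIFOLD_RANGE = RatedRange(min_c=17.0, max_c=75.0)


def loop_within_range(supply_c: float, rise_k: float,
                      rated: RatedRange = MANIFOLD_RANGE) -> bool:
    """True if both supply and return (supply + rise) fall inside the window."""
    return_c = supply_c + rise_k
    return rated.contains(supply_c) and rated.contains(return_c)


# Hypothetical operating points: (supply temperature in C, coolant rise in K).
for supply, rise in [(17.0, 10.0), (45.0, 10.0), (70.0, 10.0)]:
    status = "OK" if loop_within_range(supply, rise) else "out of range"
    print(f"supply {supply:.0f} C, return {supply + rise:.0f} C -> {status}")
```

The last operating point fails because its 80°C return exceeds the upper limit even though the supply temperature alone is within range, which is why the check covers both ends of the loop.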

Design Consideration #3: Rack Standards, Materials, and Serviceability

As rack designs evolve, operators must accommodate new design parameters within tight spatial constraints. Because manifolds are the primary means of distributing coolant to servers within a rack, their layout and port sizing have a significant effect on system performance. Open standards such as OCP's Open Rack V3 (ORv3) specification are gaining adoption to improve compatibility across vendors and components.

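Material selection is another critical factor. Wetted materials (those in contact with the coolant) must be compatible with the coolant chemistry to resist corrosion and maintain long-term reliability, while dry (non-wetted) materials are chosen mainly for structural and environmental requirements. For greenfield data centers, choosing the right materials up front is essential for efficiency and durability over the system's lifecycle.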

Finally, serviceability plays a major role in manifold and quick disconnect design. Operators need fast, spill-free maintenance and hot-swapping without downtime. Poor design here can lead to leaks, pressure loss, and extended outages, adding unnecessary risk and cost.

Conclusion: Supporting High-Density Server Cooling with Manifolds and Quick Disconnects

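Cold plates, manifolds, quick disconnects, and CDUs must work together as a single hydraulic system, so interoperability is essential: a mismatch in port sizes, flow capacity, pressure drop, or wetted materials anywhere in the loop can compromise the performance of the whole. Guaranteeing that compatibility is far simpler when one partner designs and supplies the full solution, from cold plates to CDUs to manifolds. That's why it's important to choose a partner and supplier that can deliver the complete liquid cooling stack. Read more about Flex's liquid cooling offering in our eBook.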