Data center operators are facing unprecedented AI compute demands that are forcing them to rethink infrastructure, starting at the rack. No longer a passive enclosure, the modern rack is evolving into a complex, integrated system in which power delivery, cooling, and component compatibility must be co-engineered from day one.

Barriers to Scaling AI Infrastructure

- Thermal Headroom Is Running Out: Traditional air cooling can’t keep up with AI workloads, leading to throttling and reduced hardware lifespan. Liquid cooling is essential but requires precise engineering and infrastructure.

- Power Delivery Isn’t Keeping Up: AI racks pushing 600 kW to 1 MW strain legacy AC systems. Without DC distribution and integrated energy solutions, scalability and efficiency suffer.

- Global Consistency Is Difficult: Regional differences in vendors, standards, and site capabilities turn each deployment into a custom project, raising costs and complexity.

- Integration Complexity: The tech exists, but the ecosystem lacks standardization. Blurred lines between IT and facilities—especially with cooling—cause delays and risk.

The Result: Integration Paralysis Sets In

Future-ready racks call for compatible components, streamlined vendor coordination, and validated performance across diverse environments. Without common standards or tight supply chain integration, projects can take longer than expected and require additional design cycles.

Rather than accept slower progress, organizations are increasingly looking for integrated solutions that minimize complexity, reduce time to deployment, and keep teams focused on scaling infrastructure rather than troubleshooting integration.

The Evolution of Rack Design in the Age of AI

That rethink is now reshaping operational models. Previously, operators could assemble racks from a mix of vendors: servers from one, power distribution from another, cooling added as needed. That piecemeal approach is no longer viable. The demands of AI (extreme power density, precise thermal management, and spatial constraints) require tight integration across all rack subsystems.

And we’re already seeing this shift. Platforms like NVIDIA’s MGX rack and Mount Diablo reflect a new design philosophy, in which racks are no longer assembled as a collection of parts but delivered as pre-integrated ecosystems. As demands grow, the question isn’t if infrastructure should evolve, but how that evolution can be delivered at scale.

The Turnkey Rack Alternative

The turnkey rack model offers several advantages:

- Faster time-to-value: Ready-to-operate systems reduce deployment timelines.

- Reduced complexity: Upstream integration minimizes vendor coordination and on-site troubleshooting.

- Predictable performance: Factory validation confirms that systems function as intended before they reach the site.

- Versatile device integration: Designed to accommodate 3U, 4U, and 6U devices.

- Scalability: Standardized designs support consistent replication across diverse global sites.

By addressing integration challenges at the source, turnkey racks offer a more streamlined and scalable path for deploying AI infrastructure.

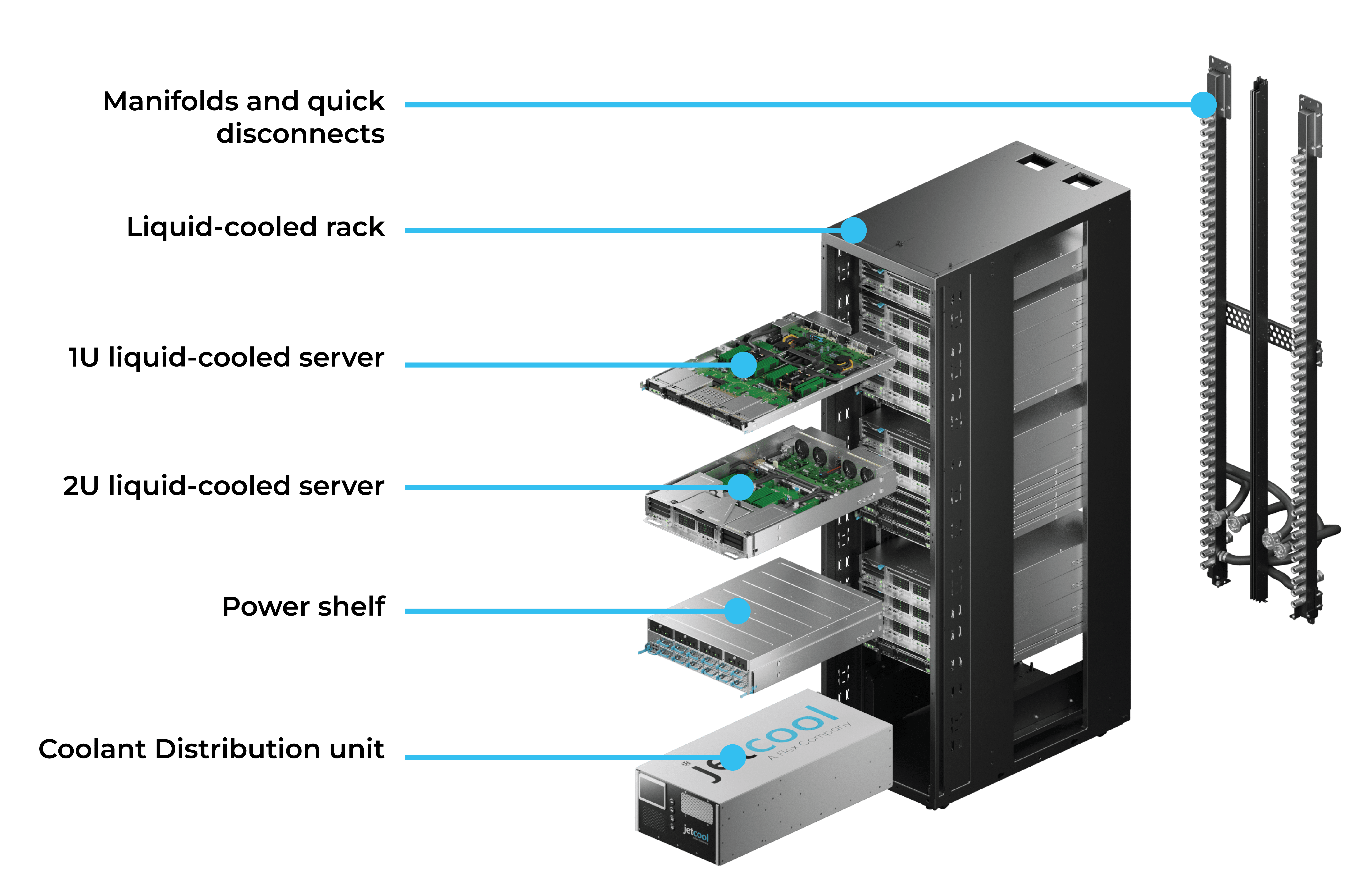

Inside the JetCool + Flex Vertically Integrated Racks

Smart Cooling at the Chip Level

Warm Water Cooling, High Delta-T Efficiency

At the rack level, the SmartSense CDU orchestrates fluid delivery with built-in telemetry, monitoring, and redundancy. It scales to 300 kW per rack and 1.8 MW per row, supporting hyperscale footprints without custom plumbing or exotic infrastructure.
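To make the rack and row figures concrete, here is a rough sizing sketch in Python. It derives how many fully loaded racks fit in one row from the 300 kW and 1.8 MW figures above, and estimates coolant flow from the standard heat balance Q = m·cp·ΔT. The function names, the assumption that all rack heat goes to the liquid loop, the use of plain water properties, and the 10 K and 20 K delta-T values are illustrative assumptions, not JetCool or Flex specifications.

```python
import math

# Approximate properties of plain water (assumption: the actual loop may use
# a water-glycol mix with somewhat different values).
WATER_CP_J_PER_KG_K = 4186.0
WATER_DENSITY_KG_PER_L = 1.0


def racks_per_row(row_capacity_kw: float, rack_capacity_kw: float) -> int:
    """Number of fully loaded racks one row's cooling capacity can support."""
    return math.floor(row_capacity_kw / rack_capacity_kw)


def coolant_flow_lpm(heat_load_kw: float, delta_t_k: float) -> float:
    """Coolant flow in litres per minute needed to carry heat_load_kw
    at a supply-to-return temperature rise of delta_t_k (Q = m * cp * dT)."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_load_kw * 1000.0 / (WATER_CP_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60.0


if __name__ == "__main__":
    # Figures from the article: 300 kW per rack, 1.8 MW per row.
    print("racks per row:", racks_per_row(1800.0, 300.0))
    # Hypothetical delta-T values, chosen only to show the trend.
    for delta_t in (10.0, 20.0):
        flow = coolant_flow_lpm(300.0, delta_t)
        print(f"delta-T {delta_t:.0f} K: {flow:.0f} L/min per rack")
```

Under these assumptions, a row supports six fully loaded racks, and doubling the delta-T from 10 K to 20 K halves the required flow per rack from roughly 430 L/min to roughly 215 L/min. That is the basic reason a high delta-T design eases pumping and plumbing requirements.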

Power at Scale: Integrated 800Vdc Architecture

The high-density power shelf system provides up to 33 kW per shelf within a compact, industry-standard footprint. Designed with six power supply units in a 3+3 redundant configuration and an intelligent power shelf controller, the system achieves a peak efficiency of 97.5% at half load, ensuring reliable and energy-efficient DC power distribution.
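The shelf figures above lend themselves to a quick back-of-the-envelope check. The Python sketch below estimates the per-PSU rating, the number of shelves needed for a given rack load, the conversion loss at the stated peak efficiency, and the bus current at 800 Vdc. It rests on several assumptions that the text does not confirm: that 33 kW is the usable shelf output (it is not stated whether this is before or after 3+3 redundancy), that the six PSUs share the rating equally, and that the 300 kW example load is fed entirely from these shelves. All function names are hypothetical.

```python
import math

SHELF_OUTPUT_KW = 33.0    # from the article; treated here as usable output (assumption)
PSUS_PER_SHELF = 6        # from the article
PEAK_EFFICIENCY = 0.975   # from the article, stated at half load
BUS_VOLTAGE_V = 800.0     # from the section heading


def per_psu_rating_kw() -> float:
    """Rating of one PSU, assuming the shelf rating is split evenly."""
    return SHELF_OUTPUT_KW / PSUS_PER_SHELF


def shelves_needed(rack_load_kw: float) -> int:
    """Smallest number of shelves whose combined output covers the load."""
    return math.ceil(rack_load_kw / SHELF_OUTPUT_KW)


def conversion_loss_kw(output_kw: float, efficiency: float = PEAK_EFFICIENCY) -> float:
    """Power dissipated as heat while delivering output_kw at the given efficiency."""
    return output_kw / efficiency - output_kw


def bus_current_a(load_kw: float, voltage_v: float = BUS_VOLTAGE_V) -> float:
    """DC bus current for a load at the given voltage (I = P / V)."""
    return load_kw * 1000.0 / voltage_v


if __name__ == "__main__":
    rack_load_kw = 300.0  # example load, borrowed from the per-rack cooling figure
    print("per-PSU rating (kW):", per_psu_rating_kw())
    print("shelves needed:", shelves_needed(rack_load_kw))
    print("loss at half load (kW):", round(conversion_loss_kw(SHELF_OUTPUT_KW / 2), 3))
    print("bus current at 800 Vdc (A):", bus_current_a(rack_load_kw))
```

Under these assumptions, each PSU is rated at 5.5 kW, a 300 kW rack needs 10 shelves, a shelf at half load dissipates about 0.42 kW, and the 800 Vdc bus carries 375 A. The same 300 kW at a lower bus voltage would require proportionally more current, which is the usual argument for high-voltage DC distribution at these densities.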

Telemetry: Visibility from Day One

Designed for Scalable Deployment

These turnkey racks are not proof-of-concept pilots; they’re production-grade platforms already being used to power AI clusters for hyperscalers and advanced enterprise environments.

Closing the Gap Between AI Vision and Infrastructure Reality

JetCool and Flex are answering that call with a new class of turnkey racks built for the AI era. They reduce integration friction, accelerate timelines, and deliver reliable performance at scale.

In the AI age, the rack isn’t just a container for IT hardware; it’s the foundation for your compute strategy. Ready to get started? Contact us today or learn how we can help as you migrate from air to liquid cooling.